28 September 2023 • 10 minute read

New Zealand's Privacy Commissioner follows global trends with latest guidance on AI

Artificial intelligence (AI) is the subject of intense public discussion. Some commentators focus on its transformational potential: once exclusive to big tech, AI now underpins new business models, processes and solutions in every sector, and the potential to build competitive advantage seems limitless. Other observers are more critical and see AI-driven threats everywhere. Concerns over responsible AI have risen sharply, and policymakers around the world are rapidly formalising AI rules to mitigate societal and technical risks.

In a global environment where AI's capability and adoption are far outpacing regulatory developments, the Office of the Privacy Commissioner (OPC) has become the first New Zealand regulator to release substantive guidance for businesses adopting AI-enabled solutions in New Zealand. The guidance builds on the OPC's guidance on generative AI tools, published in May 2023.

Although the global regulatory landscape governing AI is fragmented, common themes can be identified: the importance of good governance; transparency and explainability; accuracy; robustness and security; accountability; and human values and fairness.

These key themes underpin the OPC's guidance, which is good news for multinational organisations seeking to develop governance frameworks that are consistent with the regulatory landscape in multiple jurisdictions.

This article looks at:

- key takeaways from the OPC's guidance;

- how the recommendations stack up against what's actually happening in the market, based on insights from DLA Piper's latest global report, AI Governance: Balancing Policy, Compliance, and Commercial Value; and

- what good governance looks like in practice.

What does the guidance say?

We like that the guidance focuses on a pragmatic application of the Privacy Act 2020's Information Privacy Principles (IPPs). Something overly prescriptive would have been inconsistent with the flexible, principles-based framework established by the Privacy Act, and would have quickly become out of date, given the explosive rate at which AI is being developed and used by businesses in Aotearoa.

Key takeaways for organisations doing business in New Zealand are:

- Undertake a Privacy Impact Assessment: You are asking for trouble if you do not undertake a Privacy Impact Assessment (PIA) before deploying AI. PIAs are not mandatory under the Privacy Act, but they are generally seen as best practice. This is reiterated multiple times in the OPC's guidance, so if you have not done a PIA at the outset of an AI project, you are falling below the standards expected by the privacy regulator.

- Good governance is critical: Unsurprisingly, the guidance is clear that robust governance of AI development, deployment and use is fundamental to ensuring compliance with New Zealand's data protection framework. This requires involvement of senior leadership, and human intervention needs to be meaningful. AI governance is more than having a policy in place that talks broadly to AI ethics. See our thoughts below on what good AI governance looks like.

- The OPC defines AI broadly: The guidance defines AI as computer systems where one or more of the following applies:

  - machine learning systems developed or refined by processing training data;

  - classifier systems used to put information into categories (eg captioning images);

  - interpreter systems that turn noisy input data into standardised outputs (eg deciding what words are present in speech or handwriting);

  - generative systems used to create text, images, computer code, or something else; and/or

  - automation where computers take on tasks that people have done up until recently.

- The guidance is consistent with key themes from international regulation: One of the key challenges facing businesses trying to develop AI strategies and governance processes is the lack of an objective universal standard for AI governance. Helpfully, the OPC's guidance is consistent with key themes we are seeing emerging in new laws, regulations and guidance around the world (ie the importance of transparency and explainability; accuracy; robustness and security; accountability; and human values and fairness). Also consistent with international regulation is the OPC's call for a 'privacy-by-design' approach to implementing AI. This is beneficial for multinational organisations seeking to develop and implement a global AI governance framework.

- Uniquely Aotearoa perspective: Although broadly consistent with the developing body of international regulation, the OPC's guidance recognises an important element that is unique to Aotearoa – the need to consider te ao Māori perspectives on privacy (broadly, te ao Māori is the Māori worldview, including tikanga Māori, or Māori customs and protocols). Specific concerns identified in the guidance include:

  - bias from systems developed overseas that do not work accurately for Māori;

  - collection of Māori information without work to build relationships of trust, leading to inaccurate representations of Māori taonga that fail to uphold tapu and tikanga; and

  - exclusion from processes and decisions of building and adopting AI tools that affect Māori whānau, hapū, and iwi, including use of these tools by the public sector.

- High-risk use cases: Consistent with the position taken overseas, the OPC sees some use cases for AI as higher-risk, requiring more care. A clear example is the use of AI tools for automated decision making. The potential for direct impacts on outcomes for individuals means organisations should develop processes for human review of decisions, and should empower and adequately resource the people doing this work.

What's happening in practice?

The theory in the OPC's guidance is sound, but how does this align with what we are seeing in the market?

We have considered the guidance in light of the insights from our report AI Governance: Balancing Policy, Compliance, and Commercial Value. The report explores the balance between governance and value creation, and how businesses can build and deploy AI responsibly, safely and commercially. It cuts through AI hype and gives a practical perspective on AI strategies, challenges, risks and governance.

Key insights relevant to the OPC's guidance show that businesses are aware that robust governance is important, but it is often overshadowed by commercial drivers:

- There is significant hype surrounding AI. 96% of companies have adopted AI in some way and 55% agree that AI is a key source of competitive advantage. But oversight is falling behind. 37% of businesses frequently begin AI projects without a governance framework, leading to project interruptions later. 40% believe that AI governance should not hold back progress.

- 99% of survey respondents ranked governance as a critical AI challenge. They struggle to define what good governance looks like in their organisation. Overseeing AI initiatives to ensure they remain within regulatory guidelines is also a top challenge for 96% of companies. We know many struggle to find the right capabilities and structures internally to exercise proper oversight. On this topic, we think the OPC may be understating the work required for effective governance. The OPC's guidance notes that "it doesn't need to be difficult" and "asking a few key questions and making some basic decisions about information governance can go a long way". In our view, more is required (see below).

- Companies are hyper-aware of internal AI risk. 72% see their own use of AI, including that of employees, as their greatest threat, with particular concerns over data privacy and data ownership.

So what does good governance look like?

AI governance is more than having a policy in place that talks broadly to AI ethics. The growing power of AI demands governance regimes that include control systems, monitoring, measurement, feedback and oversight.

Data from our global report shows organisations have different views on how best to manage AI compliance. As leaders navigate a fragmented regulatory landscape and determine their risk appetite, there is unlikely to be a silver bullet for success. What's clear is that boards and senior leadership are on notice that AI is an issue that requires literacy, training and governance. Today the consensus is that AI is a separate compliance category, distinct from traditional privacy, cybersecurity and data programs.

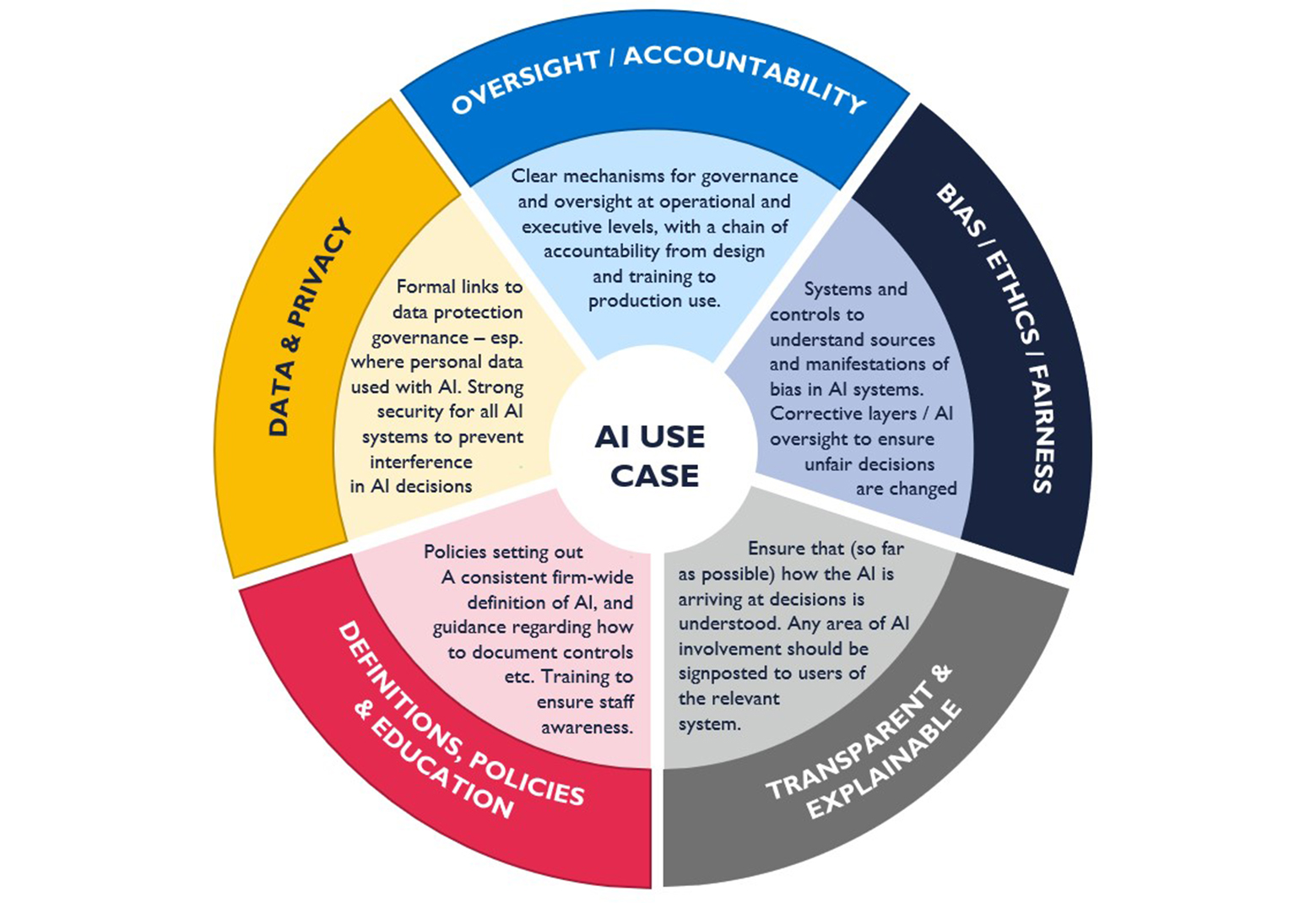

The core elements of good AI governance are shown below. In essence, it is critical to:

- Build knowledge: Promote understanding of the mechanics and limitations of AI from the top down. What should everyone know about AI? What should company leaders know? What are the problems you are targeting with AI? Where is value generated?

- Analyse risk: Gain a full picture of internal and external AI risk. Where is AI being deployed and how? What contractual warranties and mitigations are in place? Have you provided sufficient guidance to people and customers about data handling?

- Take a long-term view: Monitor and respond to the changing landscape. How is AI evolving? What innovations can you bring into your organisation? What are the implications of new tech for your AI governance framework?

- Align to values: Consider how organisational values should inform AI. What does responsible AI governance mean to your organisation? What ethical guardrails do you need to establish?

- Navigate AI partnerships: Manage partnerships and contracts with key AI risks in mind. Have you done your due diligence? Have you considered novel tender processes? Do you have relevant contractual protections on data and IP? Are service levels proactively managed?

- Establish oversight: Establish skilled oversight of AI to avoid 'knee-jerk' bans. Do legal and compliance teams have the technical information they need to be enablers? Do you have streamlined decision-making processes in place? Who is accountable for AI oversight?

- Engage with industry and regulators: Collaborate on standards and best practices. Are you up to speed on future regulation and how it will apply to your uses of AI? What actions are industry peers taking? Can industry bodies better champion your concerns?

Want to know more?

DLA Piper is a global leader in AI. We have developed tools and resources for our clients to assess their AI maturity and readiness to implement AI solutions, and keep up to date with the latest AI insights. If you want to know more, check out:

- the AI Scorebox – our free global tool to assess your organisation's AI maturity and compare to your sector peers;

- our dedicated EU AI Act app – where you can navigate, compare, bookmark, and download articles of the Act;

- AI Focus – where you can stay informed on AI developments and insights; and

- our AI and Employment Podcast series – discussing the key employment law issues facing employers arising out of AI.